When the Cloud Sneezes: Lessons from the AWS October 2025 Outage

The Day the Internet Coughed

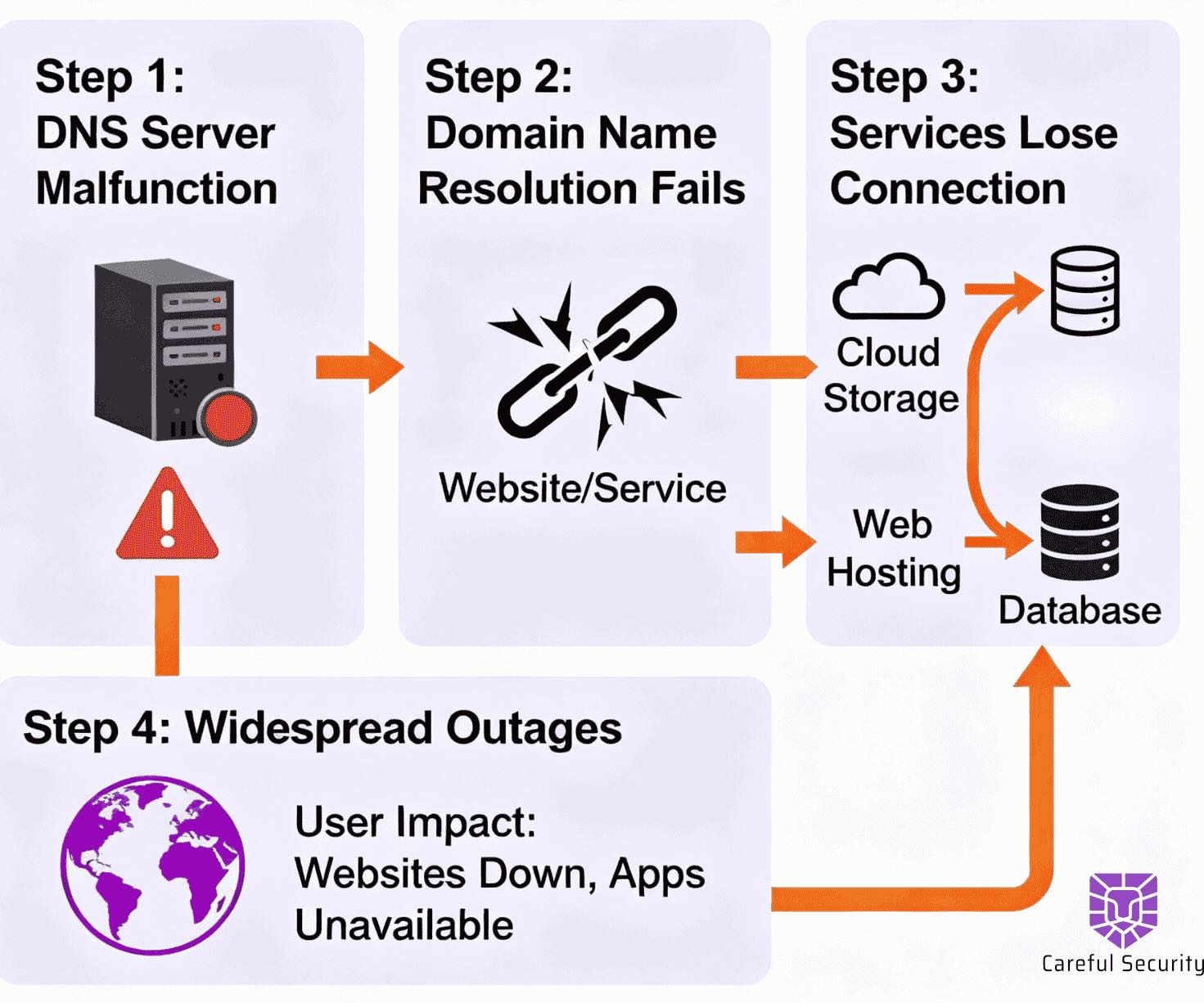

On October 20, 2025, Amazon Web Services’ US-EAST-1 region, often described as the beating heart of the modern internet, experienced a major outage that rippled across the web. A silent DNS glitch in AWS’s internal systems cascaded into hours of disruption for thousands of companies and millions of users.

DynamoDB couldn’t resolve endpoints. Lambda functions stalled. EC2 instances refused to launch. For roughly 15 hours, global apps and services such as Snapchat, Venmo, Zoom, and Canva, along with Ring cameras, went offline.

The impact was immediate and severe: billions in frozen transactions and service interruptions underscored just how fragile “the cloud” can be when a single layer fails.

What Really Happened

The root cause: a race condition in AWS’s automated DNS management system. Two internal processes clashed: one updating DNS records, another cleaning up stale entries. The result was an empty record for a key DynamoDB endpoint.

That single missing entry propagated across the internet like digital wildfire, severing service connections globally. Engineers restored operations in about fifteen hours, but downstream effects lingered for days.
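To make the failure mode concrete, here is a toy model of how an updater and a cleaner can interact badly. It is a sketch only: the record name, the plan versions, and the exact interleaving are assumptions for illustration, not a description of AWS’s actual system.

```python
# Toy model only. All names (apply_plan, cleanup_stale, the record name)
# are hypothetical and do not reflect AWS's internal tooling.

def apply_plan(records, name, plan):
    """Updater: overwrite the record with the contents of a plan."""
    records[name] = {
        "version": plan["version"],
        "addresses": list(plan["addresses"]),
    }

def cleanup_stale(records, name, newest_version):
    """Cleaner: empty the record if it was written from an outdated plan."""
    if records[name]["version"] < newest_version:
        records[name]["addresses"] = []

def simulate(delayed_updater):
    """Run one interleaving and return the addresses left in the record."""
    name = "db.example.internal"
    records = {}
    old_plan = {"version": 1, "addresses": ["10.0.0.1"]}
    new_plan = {"version": 2, "addresses": ["10.0.0.2"]}

    if delayed_updater:
        # The new plan lands first, then a delayed write of the old plan
        # overwrites it.
        apply_plan(records, name, new_plan)
        apply_plan(records, name, old_plan)
    else:
        # Expected ordering: old plan, then new plan.
        apply_plan(records, name, old_plan)
        apply_plan(records, name, new_plan)

    # The cleaner assumes anything older than version 2 is safe to remove.
    cleanup_stale(records, name, newest_version=2)
    return records[name]["addresses"]

print(simulate(delayed_updater=False))
print(simulate(delayed_updater=True))
```

In the expected ordering the record keeps its current address. In the delayed ordering the cleaner removes what it believes is stale data and leaves the record empty, even though each function did exactly what it was written to do.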

The Real Impact

AWS hosts over one-third of the world’s cloud workloads. When it stumbles, the internet feels it. The outage disrupted critical sectors including finance, healthcare, communications, and consumer services. Even some cybersecurity tools lost visibility during the event.

This wasn’t just downtime—it was a resilience wake-up call for every business operating in the digital economy.

Five Lessons from the AWS Outage

1. Resilience is in the Architecture

Even hyperscalers fail. If your continuity plan begins and ends with “we’re on AWS,” you don’t have a resilience strategy—you have a dependency. Action: Design multi-region failover, test chaos scenarios, and assume your provider will eventually fail.
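As a rough illustration of client-side multi-region failover, the sketch below tries each region in priority order and returns the first successful result. The helper names (`call_with_failover`, `fake_fetch`) and the region list are assumptions for the example. A production design would also need replicated data, health checks, and timeouts, none of which are shown here.

```python
from typing import Callable, Dict, Sequence, TypeVar

T = TypeVar("T")

class AllRegionsFailed(Exception):
    """Raised when every region in the list has failed."""

def call_with_failover(regions: Sequence[str], call: Callable[[str], T]) -> T:
    """Try `call(region)` for each region in order; return the first success."""
    errors: Dict[str, Exception] = {}
    for region in regions:
        try:
            return call(region)
        except Exception as exc:  # real code should catch narrower error types
            errors[region] = exc
    raise AllRegionsFailed(errors)

def fake_fetch(region: str) -> str:
    """Stand-in for a real request; simulates an outage in one region."""
    if region == "us-east-1":
        raise ConnectionError("simulated regional outage")
    return f"served from {region}"

print(call_with_failover(["us-east-1", "us-west-2"], fake_fetch))
```

Running the same helper with the primary region deliberately broken, as `fake_fetch` does, is a small-scale version of the chaos test the lesson recommends.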

2. The Cloud Is Human

Automation doesn’t eliminate error; it amplifies it. Every outage is the result of a system working exactly as designed, just in the wrong context. Action: Balance automation with human oversight and continuous red-team testing.
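One way to balance automation with oversight is a pre-apply check that blocks obviously destructive changes and routes large ones to a person. The sketch below is a minimal example under assumed rules: the function name, the three outcomes, and the 50% threshold are illustrative choices, not a standard.

```python
def review_change(current, proposed, max_removed_fraction=0.5):
    """Classify an automated change to a record's address list.

    Returns "block", "needs_human_approval", or "auto_apply".
    """
    # Never let automation publish an empty record for a live endpoint.
    if not proposed:
        return "block"

    # Large removals are plausible but risky, so ask a human first.
    removed = set(current) - set(proposed)
    if current and len(removed) / len(current) > max_removed_fraction:
        return "needs_human_approval"

    return "auto_apply"

current = ["10.0.0.1", "10.0.0.2", "10.0.0.3", "10.0.0.4"]
print(review_change(current, []))
print(review_change(current, ["10.0.0.1"]))
print(review_change(current, ["10.0.0.1", "10.0.0.2", "10.0.0.3", "10.0.0.9"]))
```

The point of the design is that the check runs independently of whatever produced the change, so a bug in the automation does not also disable the guardrail.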

3. Invisible Dependencies = Hidden Risk

Many organizations didn’t even realize they depended on DynamoDB until it went down. Shadow dependencies—third-party APIs, SDKs, SaaS connectors—create unseen vulnerabilities. Action: Map and monitor your service dependencies continuously.
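Dependency mapping can start as a simple graph walk. The sketch below computes everything a service depends on, directly or indirectly, from a hand-written map. The service names and the graph itself are invented for the example; in practice the map would come from tracing, manifests, or an inventory tool.

```python
def transitive_dependencies(graph, service):
    """Return every dependency reachable from `service`, direct or indirect."""
    seen = set()
    stack = list(graph.get(service, ()))
    while stack:
        dep = stack.pop()
        if dep not in seen:
            seen.add(dep)
            stack.extend(graph.get(dep, ()))
    return seen

# Hypothetical dependency map: "checkout" never names DynamoDB directly.
graph = {
    "checkout": ["payments-api", "session-store"],
    "payments-api": ["vendor-sdk"],
    "vendor-sdk": ["dynamodb"],
    "session-store": ["redis"],
}

deps = transitive_dependencies(graph, "checkout")
hidden = deps - set(graph["checkout"])
print(sorted(hidden))
```

Here `hidden` holds the dependencies that do not appear in the service’s own configuration, which is where a shadow dependency on something like DynamoDB would surface.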

4. Incident Response ≠ Disaster Recovery

Outages expose organizational weaknesses as much as technical ones. When communication fails, trust evaporates. Action: Prepare crisis playbooks and communication templates before disaster strikes.

5. Cloud Trust Is Earned, Not Assumed

AWS responded responsibly by diagnosing, owning, and explaining the failure. But the broader lesson is ownership. If your business depends on cloud infrastructure, resilience must be part of your governance.

Build Like Everything Can Break

The AWS outage reminded the industry that “the cloud” is not invincible. It’s human-built, complex, and fallible. True resilience comes not from hoping systems stay online, but from planning for when they don’t.

The cloud isn’t magic. It’s just someone else’s datacenter hosting your assets. Ask yourself: if US-EAST-1 goes dark again, does your business blink—or black out?

CISO Takeaways

- Redundancy is essential: Implement multi-region failover

- Map dependencies: Maintain real-time asset and service inventory

- Automate with guardrails: Include human validation in automated workflows

- Simulate failure: Run chaos engineering and tabletop exercises

- Communicate fast: Use pre-approved crisis playbooks

Security is also about keeping the lights on when good systems fail. When resilience becomes part of security culture, outages turn into opportunities for improvement. Build for failure. Lead with resilience.

